AI: How Do Neural Networks Work?

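This article comes from the WeChat official account “科文路”. Follows and comments are welcome. Please credit the source when reposting.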

Let’s look at what kind of computation machine learning requires, neural networks in particular.

This article translates part of “What makes TPUs fine-tuned for deep learning?” from the Google Cloud Blog.

Let’s see what kind of calculation is required for machine learning—specifically, neural networks.

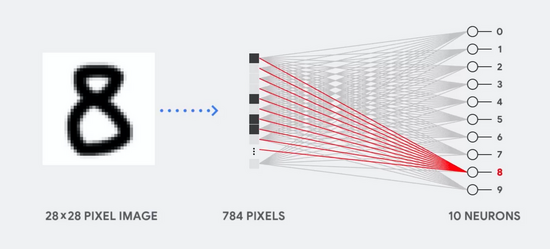

For example, imagine that we’re using a single-layer neural network to recognize an image of a hand-written digit, as shown in the following diagram:

If an image is a grid of 28 x 28 grayscale pixels, it can be converted to a vector with 784 values (dimensions). The neuron that recognizes the digit “8” takes those values and multiplies them by its parameter values (the red lines above).
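The flattening and multiplication described above can be sketched in a few lines of plain Python. This is only an illustration: the image (a single bright vertical stroke) and the parameter values (0.5 everywhere) are made up to keep the arithmetic easy to check, and the function names `flatten` and `neuron_score` are hypothetical, not taken from the original post.

```python
ROWS, COLS = 28, 28

def flatten(image):
    """Turn a 28 x 28 grid (a list of rows) into a flat list of 784 values."""
    return [pixel for row in image for pixel in row]

def neuron_score(pixels, weights):
    """Multiply each pixel by its parameter and add up the products."""
    return sum(p * w for p, w in zip(pixels, weights))

# Hypothetical input: a dark image with one bright vertical stroke in column 14.
image = [[1.0 if col == 14 else 0.0 for col in range(COLS)] for _ in range(ROWS)]
pixels = flatten(image)

# Hypothetical parameters: 0.5 for every pixel (a real network learns these).
weights = [0.5] * (ROWS * COLS)

print(len(pixels))                    # 784
print(neuron_score(pixels, weights))  # 28 bright pixels * 0.5 = 14.0
```

A trained neuron would have a different parameter for each pixel, but the computation itself is the same: 784 multiplications followed by a sum.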

The parameters work as a “filter” that extracts a feature from the data, one that indicates how similar the image is to the shape of an “8”, just like this:

This is the most basic explanation of how a neural network classifies data: multiply each data value by its respective parameter (the coloring of the dots above), then add all the products together (the collected dots at right). If you get the highest result, you have found the best match between the input data and its corresponding parameters, and it is most likely the correct answer.
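As a rough sketch of this "highest score wins" idea, the snippet below scores one input against two sets of parameters and picks the best match. Everything here is an assumption made for illustration: the input is a toy 2 x 2 image flattened to 4 values rather than 784, and the labels, parameter values, and function names are hypothetical.

```python
def score(pixels, weights):
    """Multiply each value by its parameter, then add the products."""
    return sum(p * w for p, w in zip(pixels, weights))

def classify(pixels, weights_per_class):
    """Score the input against every class and return the best label and all scores."""
    scores = {label: score(pixels, w) for label, w in weights_per_class.items()}
    best = max(scores, key=scores.get)  # label with the highest score
    return best, scores

# Hypothetical parameters for a 2 x 2 image, flattened row by row.
weights_per_class = {
    "vertical bar":   [1.0, -1.0, 1.0, -1.0],  # rewards a lit left column
    "horizontal bar": [1.0, 1.0, -1.0, -1.0],  # rewards a lit top row
}

pixels = [1.0, 0.0, 1.0, 0.0]  # left column lit

best, scores = classify(pixels, weights_per_class)
print(scores)  # {'vertical bar': 2.0, 'horizontal bar': 0.0}
print(best)    # vertical bar
```

For digit recognition the same pattern would presumably use ten sets of 784 parameters, one per digit, with the highest-scoring digit taken as the answer.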

In short, neural networks require a massive number of multiplications and additions between data and parameters. We often organize these multiplications and additions into a matrix multiplication, which you might have encountered in high-school algebra.

So the problem becomes how to execute large matrix multiplications as fast as possible while consuming less power.
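To make the link to matrix multiplication concrete, here is a minimal pure-Python sketch. Each row of `inputs` stands in for one flattened image and each column of `params` holds the parameters of one neuron, so a single matrix product yields every neuron's score for every input. The tiny sizes, the values, and the helper names are assumptions for illustration, as is the figure of 10 neurons (one per digit) in the final count.

```python
def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both given as lists of rows."""
    n, k, m = len(a), len(b), len(b[0])
    assert all(len(row) == k for row in a), "inner dimensions must match"
    return [[sum(a[i][t] * b[t][j] for t in range(k)) for j in range(m)]
            for i in range(n)]

# Hypothetical batch: 2 inputs with 3 values each (stand-ins for 784-value images).
inputs = [[1, 2, 3],
          [4, 5, 6]]

# Hypothetical parameters: one column per neuron (2 neurons, 3 parameters each).
params = [[1, 0],
          [0, 1],
          [1, 1]]

scores = matmul(inputs, params)
print(scores)  # [[4, 5], [10, 11]]

def multiply_add_count(batch, features, neurons):
    """Number of multiply-add steps in a (batch x features) by (features x neurons) product."""
    return batch * features * neurons

# One 784-pixel image scored by 10 neurons (assumed: one neuron per digit).
print(multiply_add_count(1, 784, 10))  # 7840
```

Even this single-layer example needs thousands of multiply-add steps per image, which is why the speed and power cost of matrix multiplication matters so much.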
